Weather Predicting Models using ML algorithms

How to make a machine fit and predict weather using datasets

Recently, I was working on weather-prediction models using ML algorithms. To get started, I decided to take the weather data of a random city and use it to visualize and predict weather conditions. I searched Kaggle for datasets and luckily found weather data for Delhi. Both regression and classification models are available, but I chose to work with classification models, namely Decision Tree, K-Nearest Neighbors (KNN), Support Vector Machine (SVM), and Random Forest, to predict the weather conditions of Delhi.

I downloaded the weather dataset and started by loading it into a pandas DataFrame.
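A minimal sketch of this loading step. The article does not show the file name or the full column list, so the tiny inline CSV below is a hypothetical stand-in; only ‘ _conds’ and ‘ _wdire’ (with their leading spaces) are names taken from the text.

```python
import io

import pandas as pd

# Hypothetical stand-in for the downloaded Kaggle CSV. Only " _conds" and
# " _wdire" are column names mentioned in the article; the rest are assumed.
csv_text = """datetime_utc, _conds, _tempm, _wdire
19961101-11:00,Smoke,30.0,North
19961101-12:00,,28.0,West
19961101-13:00,Haze,,NW
"""

# With the real file this would be pd.read_csv("<path to the downloaded CSV>").
df = pd.read_csv(io.StringIO(csv_text))

print(df.shape)         # number of rows and columns
print(df.isna().sum())  # count of missing values per column
```

Printing the per-column NaN counts right after loading is a quick way to see how much cleaning the data will need.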

Looking at the data in DataFrame form, we find many missing values (in the form of NaN), which is almost always the case with real-world data. We have two options here: remove the rows with missing values, or replace the missing values with the mean of their column. I found it more convenient to replace the NaN values with the column means.
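The mean replacement described above can be sketched as follows. The column names and values are illustrative assumptions, and the sketch only fills numeric columns, since a mean is not defined for text columns.

```python
import numpy as np
import pandas as pd

# Illustrative data; column names and values are assumptions.
df = pd.DataFrame({
    " _tempm": [30.0, np.nan, 26.0],
    " _hum": [40.0, 50.0, np.nan],
    " _conds": ["Smoke", None, "Haze"],
})

# Fill NaN in numeric columns with that column's mean.
numeric_cols = df.select_dtypes(include="number").columns
df[numeric_cols] = df[numeric_cols].fillna(df[numeric_cols].mean())

print(df)
```

The text column still contains its missing value afterwards, which is why a separate step is needed for the categorical columns.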

I also found a column containing only NaN values, so I removed that column from the DataFrame.
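One way to drop such a column without naming it by hand is sketched below. The column names are hypothetical, since the article does not say which column was empty.

```python
import numpy as np
import pandas as pd

# Hypothetical data: "empty_col" stands in for the all-NaN column.
df = pd.DataFrame({
    " _tempm": [30.0, 28.0],
    "empty_col": [np.nan, np.nan],
})

# Find columns in which every value is NaN, then drop them.
all_nan_cols = df.columns[df.isna().all()]
df = df.drop(columns=all_nan_cols)

print(list(df.columns))
```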

Replacing NaN values with the column mean, or removing them, falls under data cleaning. Once the data was cleaned, I moved on to visualizing the categorical data.
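A bar chart of a categorical column is built from its category counts, so a minimal sketch only needs `value_counts()`. Using ‘ _conds’ as the first column to visualize is an assumption, and the values are made up for illustration.

```python
import pandas as pd

# Made-up sample of a categorical column; " _conds" is assumed to be the
# column being visualized.
df = pd.DataFrame({" _conds": ["Smoke", "Haze", "Smoke", "Mist", "Smoke", "Haze"]})

# Count how often each category occurs, most frequent first.
counts = df[" _conds"].value_counts()
print(counts)

# With matplotlib installed, counts.plot(kind="bar") draws these counts
# as a bar chart.
```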

Likewise, we can visualize hail, rain, thunder, and wind direction. Two columns, ‘ _conds’ and ‘ _wdire’, still contain NaN values. Using preprocessing.LabelEncoder(), we can convert them into numerical values, and if any NaN values remain after that, we can drop those rows as follows.

df = df.dropna()

After this, our data is ready to be used for training and testing. But before splitting the data into training and testing sets, we first convert the pandas DataFrame to a NumPy array so that scikit-learn can work with it.
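The encoding, row-dropping, and NumPy conversion steps can be sketched together as below. Two things here are assumptions rather than the article's exact code: the sketch drops incomplete rows before encoding, so that the encoder only sees strings, and it treats ‘ _conds’ as the prediction target. The data values are made up.

```python
import numpy as np
import pandas as pd
from sklearn.preprocessing import LabelEncoder

# Made-up data; " _tempm" is an assumed numeric feature column.
df = pd.DataFrame({
    " _conds": ["Smoke", "Haze", None, "Smoke"],
    " _wdire": ["North", "West", "NW", None],
    " _tempm": [30.0, 28.0, 27.0, 29.0],
})

# Drop rows that still contain missing values (assumed to happen first here).
df = df.dropna().copy()

# Replace each text category with an integer code, one encoder per column.
for col in [" _conds", " _wdire"]:
    df[col] = LabelEncoder().fit_transform(df[col])

# Convert to NumPy arrays: y is the assumed target, X holds the features.
y = df[" _conds"].to_numpy()
X = df.drop(columns=[" _conds"]).to_numpy()

print(X.shape, y.shape)
```

LabelEncoder assigns codes in sorted order of the category names, so the integers carry no meaning beyond identifying the category.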

Now we divide the dataset into Training and Testing sets.
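A minimal sketch of the split using scikit-learn's `train_test_split`. The random arrays stand in for the real feature matrix and labels, and the 70/30 ratio and the `random_state` value are assumptions, since the article does not state them.

```python
import numpy as np
from sklearn.model_selection import train_test_split

# Random stand-ins for the real feature matrix X and label vector y.
rng = np.random.default_rng(0)
X = rng.normal(size=(100, 4))
y = rng.integers(0, 3, size=100)

# Hold out 30% of the rows for testing (assumed ratio); fixing
# random_state makes the split reproducible.
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.3, random_state=42
)

print(X_train.shape, X_test.shape)
```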

Up to this point, the procedure is the same for all the classification models: we retrieved the data, visualized it, cleaned it, and split it into training and testing sets.

Now we can use any of the above-mentioned classification models to fit and predict. If we use the Decision Tree classification model, the approach is as follows.

1. Import the Decision Tree classifier and fit the model using the training dataset.

2. Test the model using the test dataset and print a summary of the predictions using a confusion matrix and a classification report.
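The two steps above can be sketched as follows. Synthetic data from `make_classification` stands in for the cleaned Delhi dataset, and the variable names `dtc` and `dtc_predict` as well as the default hyperparameters are assumptions.

```python
from sklearn.datasets import make_classification
from sklearn.metrics import classification_report, confusion_matrix
from sklearn.model_selection import train_test_split
from sklearn.tree import DecisionTreeClassifier

# Synthetic 3-class data as a stand-in for the cleaned weather data.
X, y = make_classification(
    n_samples=300, n_features=6, n_informative=4, n_classes=3, random_state=0
)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.3, random_state=0
)

# Step 1: fit the decision tree on the training set.
dtc = DecisionTreeClassifier(random_state=0)
dtc.fit(X_train, y_train)

# Step 2: predict on the test set and summarize the results.
dtc_predict = dtc.predict(X_test)
cm = confusion_matrix(y_test, dtc_predict)
print(cm)
print(classification_report(y_test, dtc_predict))
```

In the confusion matrix, rows are the true classes and columns are the predicted classes, so the diagonal holds the correctly classified samples.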

To check the accuracy of the model, we can use the line of code below.
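A small sketch of the accuracy check using `accuracy_score`. The label arrays are made up so that the result is easy to verify by hand, and `dtc_predict` is an assumed variable name.

```python
import numpy as np
from sklearn.metrics import accuracy_score

# Made-up true labels and predictions: 3 of the 4 predictions are correct.
y_test = np.array([0, 1, 2, 1])
dtc_predict = np.array([0, 1, 1, 1])

# Accuracy is the fraction of predictions that match the true labels.
accuracy = accuracy_score(y_test, dtc_predict)
print(accuracy)  # 0.75
```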

According to Wikipedia, random forests or random decision forests are an ensemble learning method for classification, regression, and other tasks that operate by constructing a multitude of decision trees at training time and outputting the class that is the mode of the classes (classification) or the mean prediction (regression) of the individual trees. A random forest often performs better than a single decision tree, particularly on large datasets, because averaging over many trees reduces overfitting. The procedure for building a random forest model is as follows.

- Import the Random Forest classifier and fit the model using the training dataset.

The following line makes predictions using the test dataset and the trained model.

rfc_predict = rfc.predict(X_test)

And the following code prints a summary of the prediction results.

The accuracy of the model can be evaluated as follows.
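Putting the random forest steps together, a sketch under the same assumptions as before: synthetic data stands in for the weather data, and `n_estimators=100` and the `random_state` values are assumed rather than taken from the article.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.metrics import accuracy_score, classification_report, confusion_matrix
from sklearn.model_selection import train_test_split

# Synthetic 3-class data as a stand-in for the cleaned weather data.
X, y = make_classification(
    n_samples=300, n_features=6, n_informative=4, n_classes=3, random_state=0
)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.3, random_state=0
)

# Fit a forest of 100 trees (assumed setting) on the training set.
rfc = RandomForestClassifier(n_estimators=100, random_state=0)
rfc.fit(X_train, y_train)

# Predict, summarize, and score on the test set.
rfc_predict = rfc.predict(X_test)
print(confusion_matrix(y_test, rfc_predict))
print(classification_report(y_test, rfc_predict))

rfc_accuracy = accuracy_score(y_test, rfc_predict)
print(rfc_accuracy)
```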

As stated before, this article covers decision tree, random forest, KNN, and SVM. I could have written four separate articles, one for each of them, but when it comes to the basic usage of these algorithms, there is only a slight difference between them.

In the case of KNN, after data cleaning we proceed as follows.

1. Import the K-Neighbors classifier and train the model using the training dataset.

2. Test the model using the test dataset and print a summary of the predictions using a confusion matrix and a classification report.

In this case, we take k = 10. We could search for the most suitable k, but since the dataset is fairly large, a common rule of thumb is to pick a k close to sqrt(N), where N is the number of data points; here that is around 131.
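The KNN steps and the sqrt(N) rule of thumb can be sketched as below. Synthetic data stands in for the weather data, so the rule-of-thumb value differs from the 131 quoted above, and taking N as the total number of rows is an assumption.

```python
import math

from sklearn.datasets import make_classification
from sklearn.metrics import classification_report, confusion_matrix
from sklearn.model_selection import train_test_split
from sklearn.neighbors import KNeighborsClassifier

# Synthetic 3-class data as a stand-in for the cleaned weather data.
X, y = make_classification(
    n_samples=400, n_features=6, n_informative=4, n_classes=3, random_state=0
)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.3, random_state=0
)

# Step 1: train KNN with k = 10 on the training set.
knn = KNeighborsClassifier(n_neighbors=10)
knn.fit(X_train, y_train)

# Step 2: predict on the test set and summarize the results.
knn_predict = knn.predict(X_test)
print(confusion_matrix(y_test, knn_predict))
print(classification_report(y_test, knn_predict))

# Rule of thumb: k close to sqrt(N), with N taken as the number of rows.
k_rule_of_thumb = round(math.sqrt(len(X)))
print(k_rule_of_thumb)  # 20 for the 400 synthetic rows used here
```

Passing `k_rule_of_thumb` as `n_neighbors` instead of 10 is a one-line change, which makes it easy to compare the two choices on the same split.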

After this, you can easily predict using the test data and calculate the accuracy of the model. Of course, if you want, you can play around with the target variable to predict different targets.

And here comes the last but not least predictive model, i.e. SVM. Once data cleaning is done, we proceed as follows.

1. Import the Support Vector classifier and train the model using the training dataset.

2. Test the model using the test dataset and print a summary of the predictions using a confusion matrix and a classification report.
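A sketch of the SVM steps with scikit-learn's `SVC`. The article does not mention a kernel or other hyperparameters, so the defaults are used here as an assumption, and synthetic data again stands in for the weather data.

```python
from sklearn.datasets import make_classification
from sklearn.metrics import accuracy_score, classification_report, confusion_matrix
from sklearn.model_selection import train_test_split
from sklearn.svm import SVC

# Synthetic 3-class data as a stand-in for the cleaned weather data.
X, y = make_classification(
    n_samples=300, n_features=6, n_informative=4, n_classes=3, random_state=0
)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.3, random_state=0
)

# Step 1: train a support vector classifier with default settings (assumed).
svc = SVC()
svc.fit(X_train, y_train)

# Step 2: predict on the test set and summarize the results.
svc_predict = svc.predict(X_test)
print(confusion_matrix(y_test, svc_predict))
print(classification_report(y_test, svc_predict))

svc_accuracy = accuracy_score(y_test, svc_predict)
print(svc_accuracy)
```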

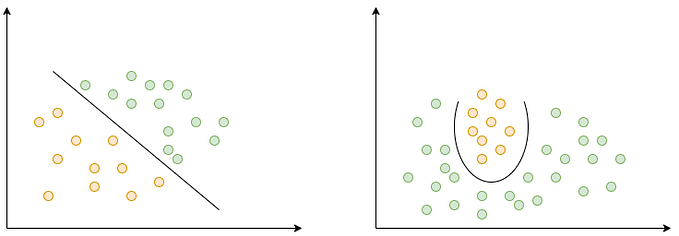

Understanding whether a machine learning task is a regression or a classification problem is key to selecting the right algorithm. In this article, I only discussed classification models. I am looking forward to publishing something about regression models and related topics as well.

Wrapping it up for now.

Do you have any questions or doubts?

Feel free to post below.